
Understanding LSTM Networks

Posted on August 27, 2015

Recurrent Neural Networks

Humans don't start their thinking from scratch every second. As you read this essay, you understand each word based on your understanding of previous words. You don't throw everything away and start thinking from scratch again. Your thoughts have persistence.

Traditional neural networks can't do this, and it seems like a major shortcoming. For example, imagine you want to classify what kind of event is happening at every point in a movie. It's unclear how a traditional neural network could use its reasoning about previous events in the film to inform later ones.

Recurrent neural networks address this issue. They are networks with loops in them, allowing information to persist.

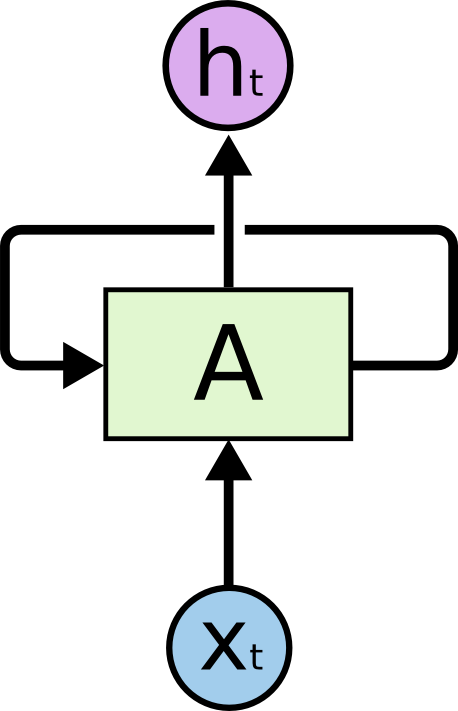

Recurrent Neural Networks have loops.

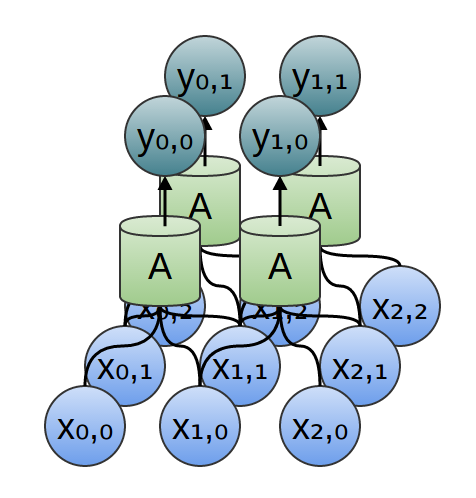

In the above diagram, a chunk of neural network, \(A\), looks at some input \(x_t\) and outputs a value \(h_t\). A loop allows information to be passed from one step of the network to the next.

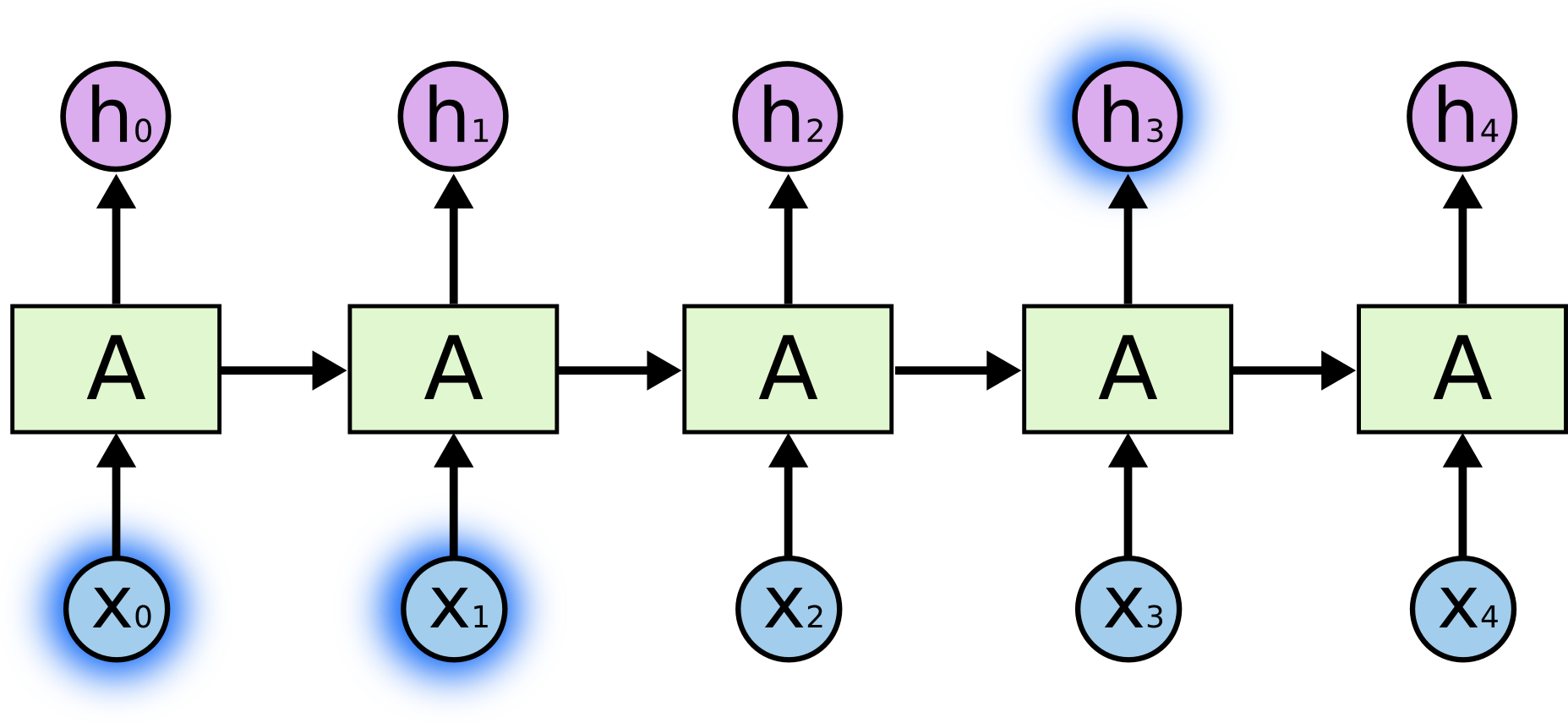

These loops make recurrent neural networks seem kind of mysterious. However, if you think a bit more, it turns out that they aren't all that different from a normal neural network. A recurrent neural network can be thought of as multiple copies of the same network, each passing a message to a successor. Consider what happens if we unroll the loop:

An unrolled recurrent neural network.
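To make the unrolling concrete, here is a minimal plain-Python sketch. It assumes the simplest possible chunk \(A\), a single tanh layer computing \(h_t = \tanh(W \cdot [h_{t-1}, x_t] + b)\); the names `rnn_step`, `rnn_unroll`, `W` and `b` are hypothetical, and the weights are arbitrary rather than learned. The point it illustrates is that every copy in the chain shares the same weights, so "unrolling" amounts to an ordinary loop.

```python
import math

def rnn_step(W, b, h_prev, x_t):
    """One copy of the chunk A: h_t = tanh(W . [h_prev, x_t] + b)."""
    z = h_prev + x_t  # list concatenation plays the role of [h_{t-1}, x_t]
    return [math.tanh(sum(w * v for w, v in zip(row, z)) + b_i)
            for row, b_i in zip(W, b)]

def rnn_unroll(W, b, h0, xs):
    """Apply the same rnn_step to every input, passing h along the chain."""
    h, outputs = h0, []
    for x_t in xs:
        h = rnn_step(W, b, h, x_t)  # same W and b at every step
        outputs.append(h)
    return outputs

# Hypothetical sizes: hidden size 2, input size 1, so W is 2 x 3.
W = [[0.5, -0.3, 0.8],
     [0.1, 0.4, -0.6]]
b = [0.0, 0.1]
hs = rnn_unroll(W, b, h0=[0.0, 0.0], xs=[[1.0], [0.5], [-1.0]])
```

Feeding each returned `h` back in as `h_prev` is exactly the loop in the rolled-up diagram; the unrolled picture just draws one copy per loop iteration.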

This chain-like nature reveals that recurrent neural networks are intimately related to sequences and lists. They're the natural architecture of neural network to use for such data.

And they certainly are used! In the last few years, there have been incredible successes applying RNNs to a variety of problems: speech recognition, language modeling, translation, image captioning… The list goes on. I'll leave discussion of the amazing feats one can achieve with RNNs to Andrej Karpathy's excellent blog post, The Unreasonable Effectiveness of Recurrent Neural Networks. But they really are pretty amazing.

Essential to these successes is the use of "LSTMs," a very special kind of recurrent neural network which works, for many tasks, much much better than the standard version. Almost all exciting results based on recurrent neural networks are achieved with them. It's these LSTMs that this essay will explore.

The Problem of Long-Term Dependencies

One of the appeals of RNNs is the idea that they might be able to connect previous information to the present task, such as using previous video frames to inform the understanding of the present frame. If RNNs could do this, they'd be extremely useful. But can they? It depends.

Sometimes, we only need to look at recent information to perform the present task. For example, consider a language model trying to predict the next word based on the previous ones. If we are trying to predict the last word in "the clouds are in the sky," we don't need any further context; it's pretty obvious the next word is going to be sky. In such cases, where the gap between the relevant information and the place where it's needed is small, RNNs can learn to use the past information.

But there are also cases where we need more context. Consider trying to predict the last word in the text "I grew up in France… I speak fluent French." Recent information suggests that the next word is probably the name of a language, but if we want to narrow down which language, we need the context of France, from further back. It's entirely possible for the gap between the relevant information and the point where it is needed to become very large.

Unfortunately, as that gap grows, RNNs become unable to learn to connect the information.

In theory, RNNs are absolutely capable of handling such "long-term dependencies." A human could carefully pick parameters for them to solve toy problems of this form. Sadly, in practice, RNNs don't seem to be able to learn them. The problem was explored in depth by Hochreiter (1991) [German] and Bengio, et al. (1994), who found some pretty fundamental reasons why it might be difficult.
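One way to get a feel for the difficulty is a toy scalar RNN, \(h_t = \tanh(w\,h_{t-1} + x_t)\). By the chain rule, \(\partial h_T / \partial h_0\) is a product of \(T\) factors \(w\,(1 - h_t^2)\), so when each factor is below one in magnitude the learning signal from far back shrinks exponentially with the gap. This is a simplified illustration under those assumptions (scalar state, hypothetical `w = 0.9`, constant input `0.5`), not a reproduction of the analyses by Hochreiter or Bengio, et al.

```python
import math

def gradient_through_time(w, xs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x_t in xs:
        h = math.tanh(w * h + x_t)
        grad *= w * (1.0 - h * h)  # chain rule: d h_t / d h_{t-1}
    return grad

w = 0.9  # hypothetical recurrent weight
for gap in (1, 5, 20, 50):
    print(gap, gradient_through_time(w, [0.5] * gap))
```

The printed values fall off rapidly as the gap grows, which is one intuition for why plain RNNs struggle to learn connections across long gaps by gradient descent.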

Thankfully, LSTMs don't have this problem!

LSTM Networks

Long Short Term Memory networks, usually just called "LSTMs," are a special kind of RNN, capable of learning long-term dependencies. They were introduced by Hochreiter & Schmidhuber (1997), and were refined and popularized by many people in following work.[1] They work tremendously well on a large variety of problems, and are now widely used.

LSTMs are explicitly designed to avoid the long-term dependency problem. Remembering information for long periods of time is practically their default behavior, not something they struggle to learn!

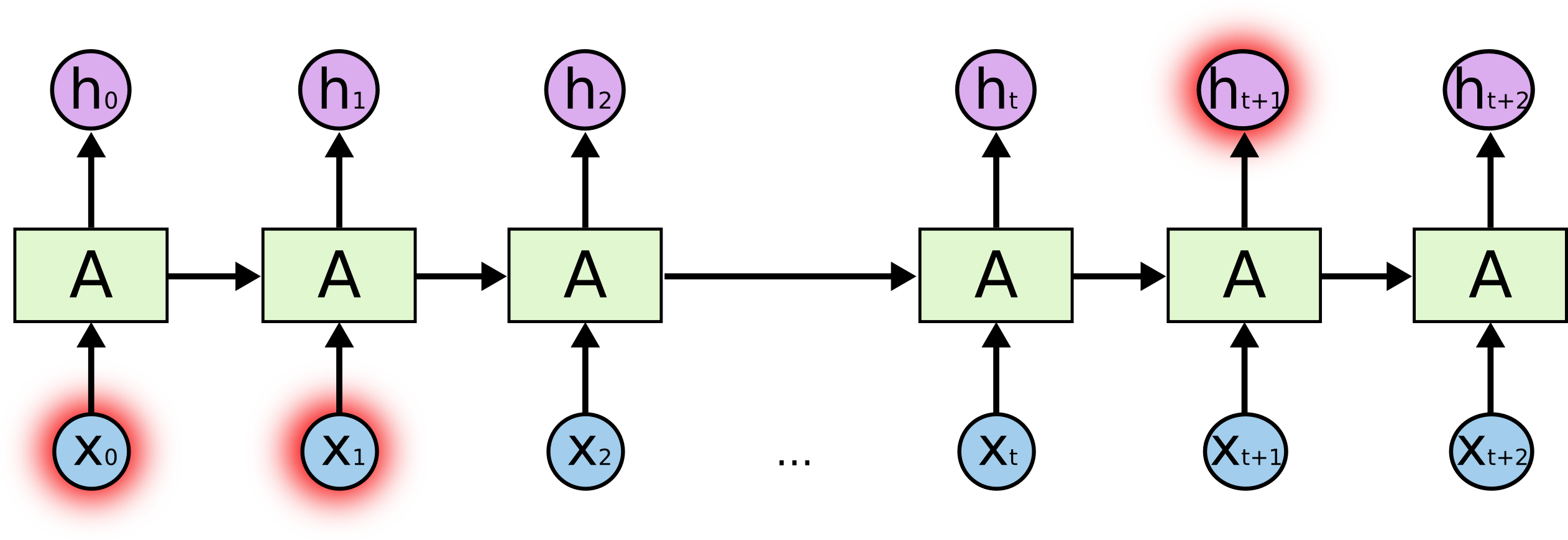

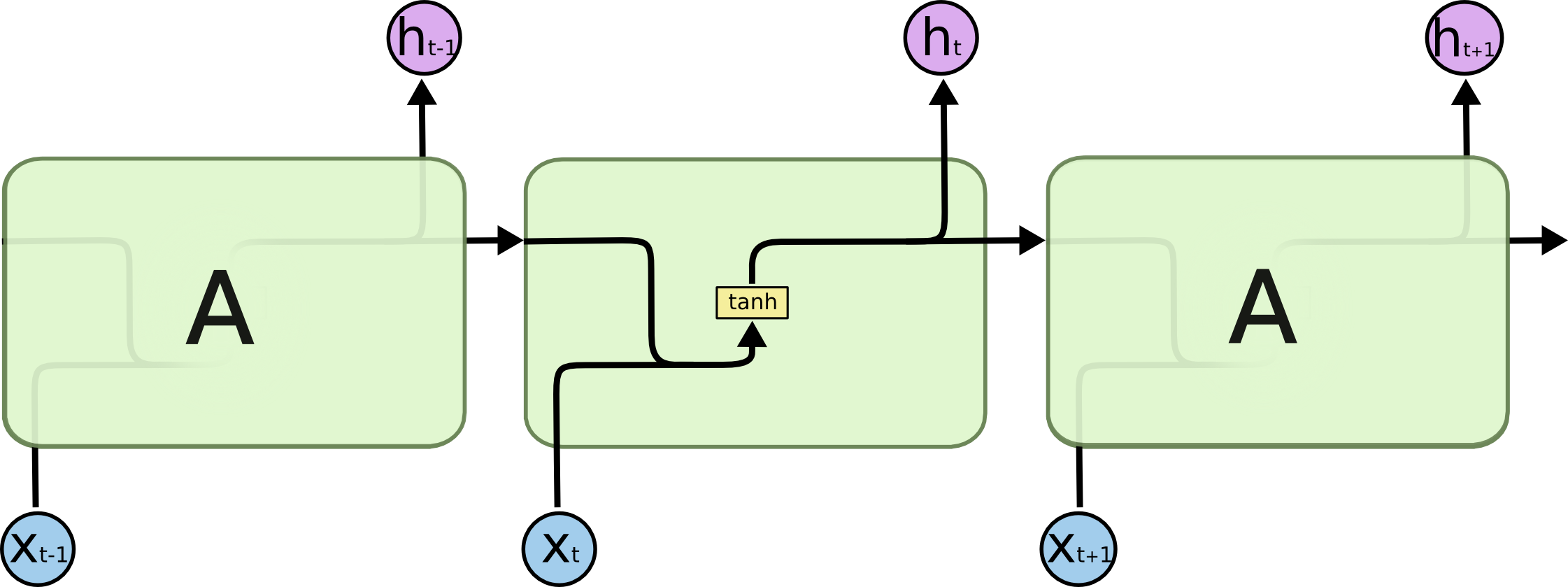

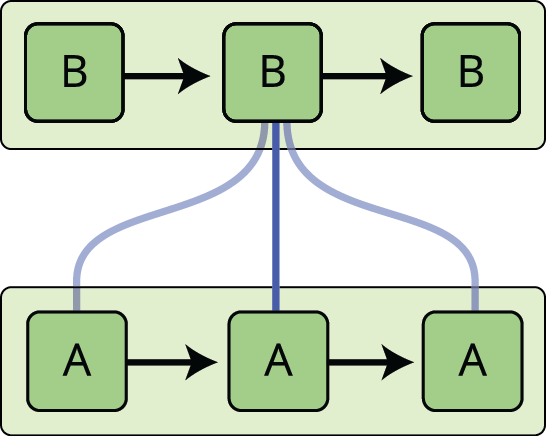

All recurrent neural networks have the form of a chain of repeating modules of neural network. In standard RNNs, this repeating module will have a very simple structure, such as a single tanh layer.

The repeating module in a standard RNN contains a single layer.

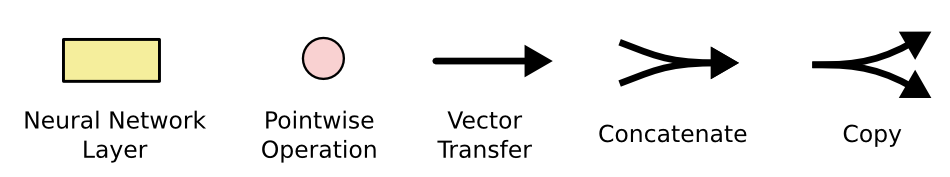

LSTMs also have this chain-like structure, but the repeating module has a different structure. Instead of having a single neural network layer, there are four, interacting in a very special way.

The repeating module in an LSTM contains four interacting layers.

Don't worry about the details of what's going on. We'll walk through the LSTM diagram step by step later. For now, let's just try to get comfortable with the notation we'll be using.

In the above diagram, each line carries an entire vector, from the output of one node to the inputs of others. The pink circles represent pointwise operations, like vector addition, while the yellow boxes are learned neural network layers. Lines merging denote concatenation, while a line forking denotes its content being copied and the copies going to different locations.
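One possible reading of this notation in code, as a minimal sketch assuming vectors are plain Python lists; the function names `concat`, `pointwise`, `fork` and `learned_layer` are hypothetical helpers, not part of any library.

```python
import math
import operator

def concat(a, b):
    """Two lines merging: concatenation, e.g. [h_{t-1}, x_t]."""
    return a + b

def pointwise(op, a, b):
    """A pointwise operation: op applied to matching components."""
    return [op(x, y) for x, y in zip(a, b)]

def fork(v):
    """A line forking: the same content copied to two destinations."""
    return list(v), list(v)

def learned_layer(W, b, v, activation):
    """A learned layer: activation(W . v + b), one output per row of W."""
    return [activation(sum(w * x for w, x in zip(row, v)) + b_i)
            for row, b_i in zip(W, b)]

h_prev, x_t = [0.5, -0.5], [1.0]
z = concat(h_prev, x_t)                       # [0.5, -0.5, 1.0]
s = pointwise(operator.add, h_prev, h_prev)   # [1.0, -1.0]
left, right = fork(h_prev)                    # two independent copies
out = learned_layer([[1.0, 1.0, 0.0]], [0.0], z, math.tanh)  # [0.0]
```

Every gate and layer in the walkthrough that follows can be written with just these four pieces.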

The Core Idea Behind LSTMs

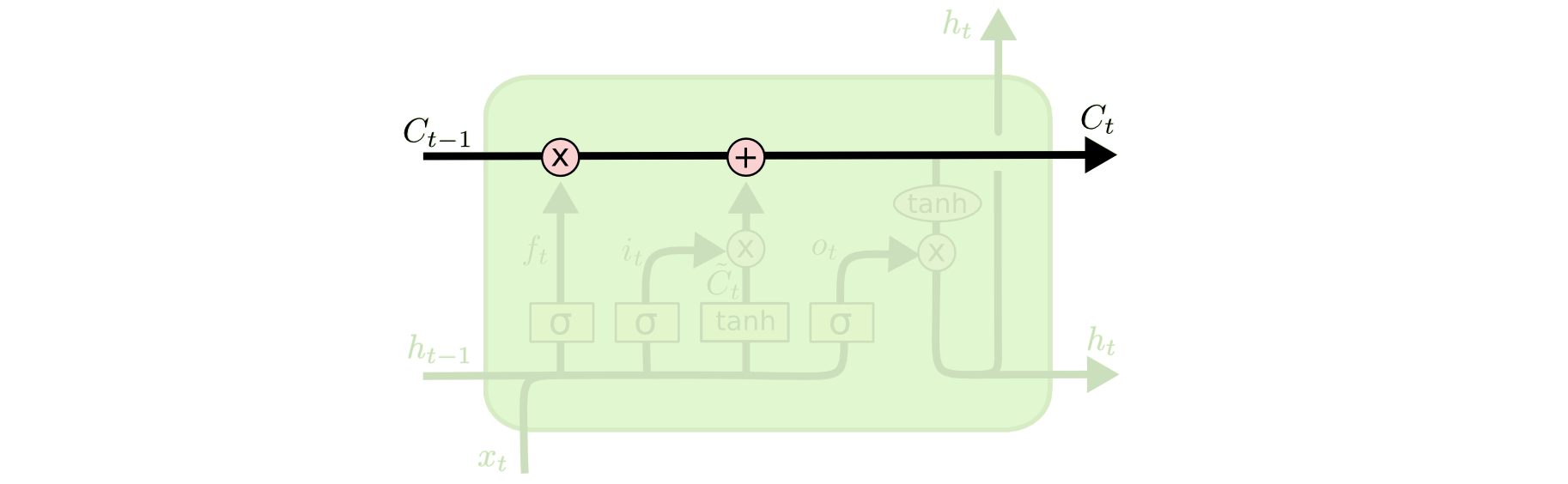

The key to LSTMs is the cell state, the horizontal line running through the top of the diagram.

The cell state is kind of like a conveyor belt. It runs straight down the entire chain, with only some minor linear interactions. It's very easy for information to just flow along it unchanged.

The LSTM does have the ability to remove or add information to the cell state, carefully regulated by structures called gates.

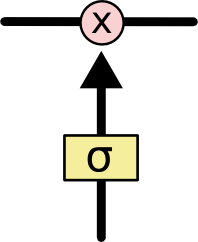

Gates are a way to optionally let information through. They are composed of a sigmoid neural net layer and a pointwise multiplication operation.

The sigmoid layer outputs numbers between zero and one, describing how much of each component should be let through. A value of zero means "let nothing through," while a value of one means "let everything through!"

An LSTM has three of these gates, to protect and control the cell state.
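A gate can be sketched in a few lines of plain Python: a sigmoid layer over the concatenated \([h_{t-1}, x_t]\), followed by pointwise multiplication. The weights and biases here are hypothetical, chosen by hand so that one entry is closed and the other open; in a real LSTM each of the three gates has its own learned weights.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gate(W, b, h_prev, x_t):
    """Sigmoid layer over [h_prev, x_t]; every entry lies between 0 and 1."""
    z = h_prev + x_t
    return [sigmoid(sum(w * v for w, v in zip(row, z)) + b_i)
            for row, b_i in zip(W, b)]

def apply_gate(g, v):
    """Pointwise multiply: g[k] near 0 blocks v[k], g[k] near 1 lets it through."""
    return [g_k * v_k for g_k, v_k in zip(g, v)]

# Hypothetical weights: zeros, with biases chosen to close entry 0 and open entry 1.
W = [[0.0, 0.0], [0.0, 0.0]]
b = [-10.0, 10.0]
g = gate(W, b, h_prev=[0.0], x_t=[0.0])
passed = apply_gate(g, [3.0, 3.0])
```

Here `passed` comes out close to `[0.0, 3.0]`: the first component is almost entirely blocked and the second almost entirely let through.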

Step-by-Step LSTM Walk Through

The first step in our LSTM is to decide what information we're going to throw away from the cell state. This decision is made by a sigmoid layer called the "forget gate layer." It looks at \(h_{t-1}\) and \(x_t\), and outputs a number between \(0\) and \(1\) for each number in the cell state \(C_{t-1}\). A \(1\) represents "completely keep this" while a \(0\) represents "completely get rid of this."

Let's go back to our example of a language model trying to predict the next word based on all the previous ones. In such a problem, the cell state might include the gender of the present subject, so that the correct pronouns can be used. When we see a new subject, we want to forget the gender of the old subject.
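In the usual way of writing it down, the forget gate is \(f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f)\), where \([h_{t-1}, x_t]\) is the concatenation described earlier and \(W_f\), \(b_f\) are learned. Below is a minimal plain-Python sketch; the sizes and weight values are hypothetical, chosen so that an input of `1.0` (standing in for "a new subject appeared") keeps the first cell-state entry and nearly erases the second.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forget_gate(W_f, b_f, h_prev, x_t):
    """f_t = sigmoid(W_f . [h_prev, x_t] + b_f), one value per cell-state entry."""
    z = h_prev + x_t
    return [sigmoid(sum(w * v for w, v in zip(row, z)) + b_k)
            for row, b_k in zip(W_f, b_f)]

# Hypothetical sizes: cell/hidden size 2, input size 1, so W_f is 2 x 3.
W_f = [[0.0, 0.0, 5.0],
       [0.0, 0.0, -5.0]]
b_f = [0.0, 0.0]
C_prev = [1.0, 1.0]
f_t = forget_gate(W_f, b_f, h_prev=[0.0, 0.0], x_t=[1.0])
kept = [f * c for f, c in zip(f_t, C_prev)]  # how much of C_{t-1} survives
```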

The next step is to decide what new information we're going to store in the cell state. This has two parts. First, a sigmoid layer called the "input gate layer" decides which values we'll update. Next, a tanh layer creates a vector of new candidate values, \(\tilde{C}_t\), that could be added to the state. In the next step, we'll combine these two to create an update to the state.

In the case of our language model, we'd want to add the gender of the new subject to the cell state, to replace the old one we're forgetting.
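Written as equations, the two parts are usually \(i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i)\) and \(\tilde{C}_t = \tanh(W_C \cdot [h_{t-1}, x_t] + b_C)\): both read the same concatenated input but use separate weights. A minimal sketch, with a hypothetical `dense` helper and hand-picked weights that open the input gate only on the second entry and propose a value near 0.9 there:

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(W, b, v, act):
    """act(W . v + b), computed row by row."""
    return [act(sum(w * x for w, x in zip(row, v)) + b_k)
            for row, b_k in zip(W, b)]

def input_and_candidate(W_i, b_i, W_C, b_C, h_prev, x_t):
    z = h_prev + x_t                          # [h_{t-1}, x_t]
    i_t = dense(W_i, b_i, z, sigmoid)         # which entries to update, in (0, 1)
    C_tilde = dense(W_C, b_C, z, math.tanh)   # candidate values, in (-1, 1)
    return i_t, C_tilde

# Hypothetical weights: open the gate on entry 1 only, propose a value there.
W_i = [[0.0, 0.0, -5.0], [0.0, 0.0, 5.0]]
W_C = [[0.0, 0.0, 0.0], [0.0, 0.0, 1.5]]
i_t, C_tilde = input_and_candidate(W_i, [0.0, 0.0], W_C, [0.0, 0.0],
                                   [0.0, 0.0], [1.0])
```

The tanh keeps candidate values in \((-1, 1)\), while the sigmoid gate decides how much of each candidate will actually be written.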

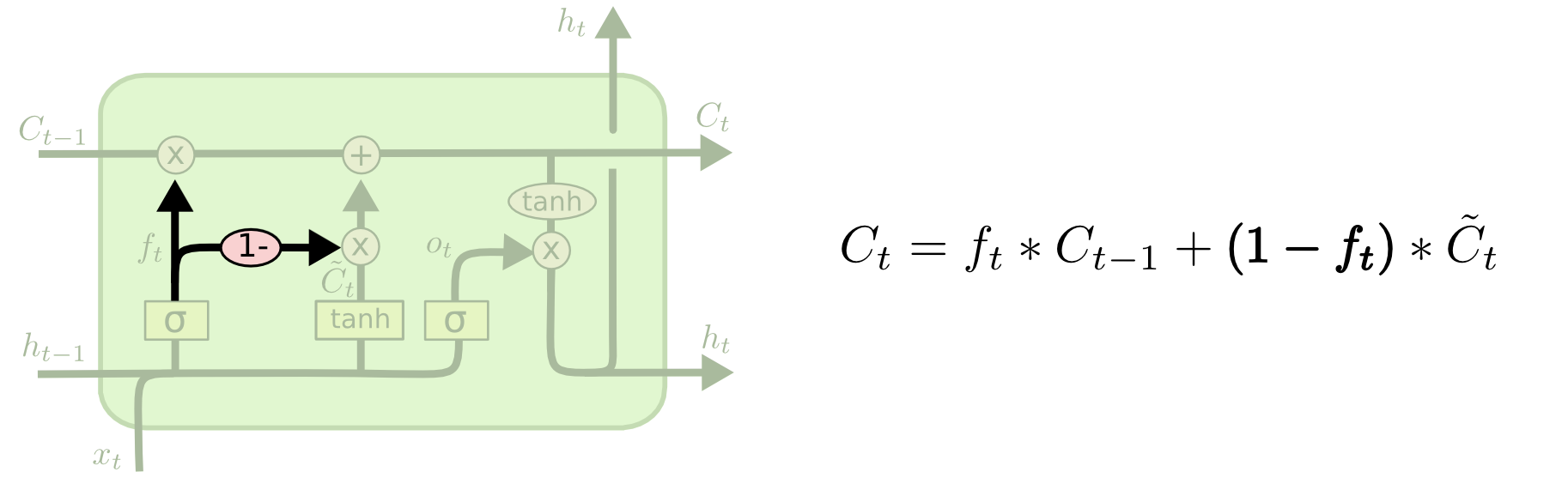

It's now time to update the old cell state, \(C_{t-1}\), into the new cell state \(C_t\). The previous steps already decided what to do; we just need to actually do it.

We multiply the old state by \(f_t\), forgetting the things we decided to forget earlier. Then we add \(i_t*\tilde{C}_t\). These are the new candidate values, scaled by how much we decided to update each state value.

In the case of the language model, this is where we'd actually drop the information about the old subject's gender and add the new information, as we decided in the previous steps.
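The update is \(C_t = f_t * C_{t-1} + i_t * \tilde{C}_t\), with \(*\) meaning pointwise multiplication. In this sketch the gate values are written out by hand (hypothetical) rather than computed. The second half shows the conveyor-belt behavior described earlier: with the forget gate at all ones and the input gate at all zeros, the state passes through any number of steps unchanged.

```python
def cell_update(f_t, C_prev, i_t, C_tilde):
    """C_t = f_t * C_{t-1} + i_t * C~_t, all operations pointwise."""
    return [f * c + i * ct for f, c, i, ct in zip(f_t, C_prev, i_t, C_tilde)]

# Keep entry 0, replace entry 1 (e.g. swap in the new subject's gender).
C_t = cell_update(f_t=[1.0, 0.0], C_prev=[0.3, -0.7],
                  i_t=[0.0, 1.0], C_tilde=[0.9, 0.9])
print(C_t)  # [0.3, 0.9]

# Conveyor belt: with f_t = 1 and i_t = 0 nothing changes, however long the chain.
C = [0.3, -0.7]
for _ in range(1000):
    C = cell_update([1.0, 1.0], C, [0.0, 0.0], [0.5, 0.5])
print(C)  # [0.3, -0.7]
```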

Finally, we need to decide what we're going to output. This output will be based on our cell state, but will be a filtered version. First, we run a sigmoid layer which decides what parts of the cell state we're going to output. Then, we put the cell state through \(\tanh\) (to push the values to be between \(-1\) and \(1\)) and multiply it by the output of the sigmoid gate, so that we only output the parts we decided to.

For the language model example, since it just saw a subject, it might want to output information relevant to a verb, in case that's what is coming next. For example, it might output whether the subject is singular or plural, so that we know what form a verb should be conjugated into if that's what follows next.
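The output step is usually written \(o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o)\) and \(h_t = o_t * \tanh(C_t)\). Putting all four layers together gives one full LSTM step. The sketch below is plain Python with hypothetical sizes and untrained random weights, only meant to show how the pieces connect; `lstm_step`, `dense` and the `params` layout are illustrative names, not a library API.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(W, b, v, act):
    """act(W . v + b), computed row by row."""
    return [act(sum(w * x for w, x in zip(row, v)) + b_k)
            for row, b_k in zip(W, b)]

def lstm_step(params, h_prev, C_prev, x_t):
    """One LSTM step. params maps "f", "i", "C", "o" to (W, b) pairs."""
    z = h_prev + x_t                                 # [h_{t-1}, x_t]
    f_t = dense(*params["f"], z, sigmoid)            # forget gate
    i_t = dense(*params["i"], z, sigmoid)            # input gate
    C_tilde = dense(*params["C"], z, math.tanh)      # candidate values
    o_t = dense(*params["o"], z, sigmoid)            # output gate
    C_t = [f * c + i * ct for f, c, i, ct in zip(f_t, C_prev, i_t, C_tilde)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, C_t)]  # filtered cell state
    return h_t, C_t

# Hypothetical sizes and untrained random weights, just to exercise the code.
HIDDEN, INPUT = 2, 1
rng = random.Random(0)

def random_layer():
    W = [[rng.uniform(-0.5, 0.5) for _ in range(HIDDEN + INPUT)]
         for _ in range(HIDDEN)]
    return W, [0.0] * HIDDEN

params = {name: random_layer() for name in ("f", "i", "C", "o")}
h, C = [0.0] * HIDDEN, [0.0] * HIDDEN
for x_t in ([1.0], [0.0], [-1.0]):
    h, C = lstm_step(params, h, C, x_t)
```

Because \(o_t\) lies in \((0, 1)\) and \(\tanh\) squashes into \((-1, 1)\), each entry of `h` stays within \((-1, 1)\), while the cell state `C` itself is not squashed.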

Variants on Long Short Term Memory

What I've described so far is a pretty normal LSTM. But not all LSTMs are the same as the above. In fact, it seems like almost every paper involving LSTMs uses a slightly different version. The differences are minor, but it's worth mentioning some of them.

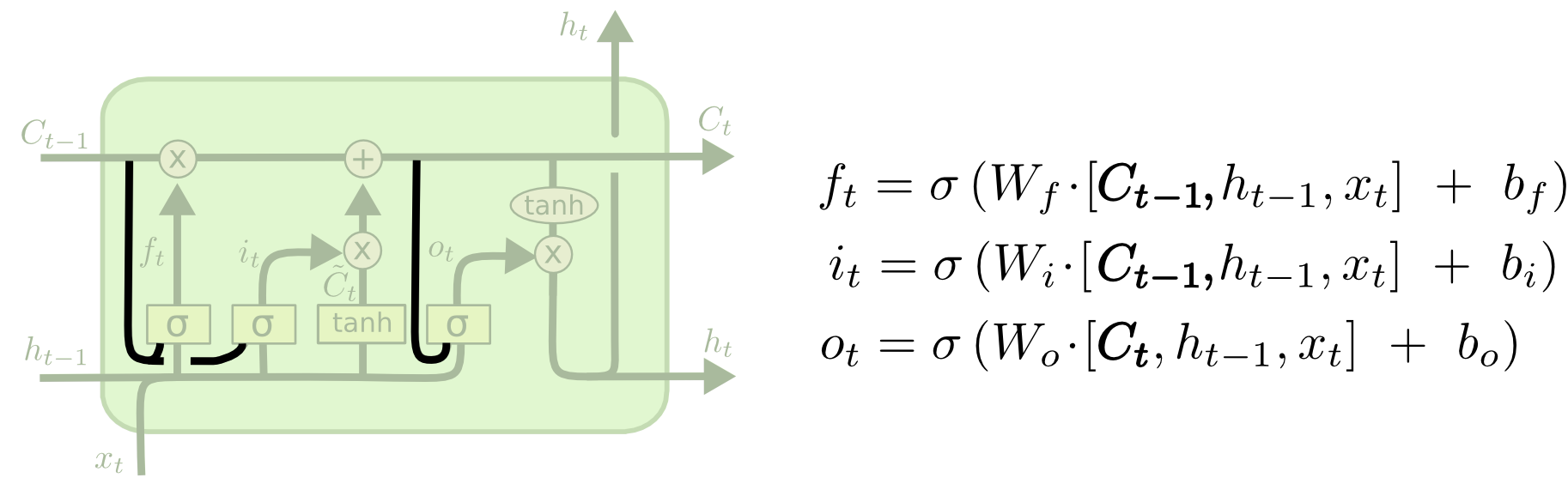

One popular LSTM variant, introduced by Gers & Schmidhuber (2000), is adding "peephole connections." This means that we let the gate layers look at the cell state.

The above diagram adds peepholes to all the gates, but many papers will give some peepholes and not others.
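One common way to write peepholes is to concatenate the cell state into each gate's input: \(f_t = \sigma(W_f \cdot [C_{t-1}, h_{t-1}, x_t] + b_f)\), likewise for \(i_t\), and \(o_t = \sigma(W_o \cdot [C_t, h_{t-1}, x_t] + b_o)\), so the output gate peeks at the already-updated state. The sketch below shows only the changed gate input; the weights are hypothetical, and implementations differ in how the peephole weights are parameterized.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def peephole_gate(W, b, C, h_prev, x_t):
    """sigmoid(W . [C, h_prev, x_t] + b): the gate can now see the cell state C."""
    z = C + h_prev + x_t
    return [sigmoid(sum(w * v for w, v in zip(row, z)) + b_k)
            for row, b_k in zip(W, b)]

# Hypothetical 1-unit example: the only nonzero weight is on the cell state.
W = [[4.0, 0.0, 0.0]]
b = [0.0]
low = peephole_gate(W, b, C=[-1.0], h_prev=[0.0], x_t=[0.0])
high = peephole_gate(W, b, C=[1.0], h_prev=[0.0], x_t=[0.0])
```

With \(h_{t-1}\) and \(x_t\) held fixed, the gate still changes as the cell state changes, which a gate in the plain LSTM above cannot do.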

Another variation is to use coupled forget and input gates. Instead of separately deciding what to forget and what we should add new information to, we make those decisions together. We only forget when we're going to input something in its place. We only input new values to the state when we forget something older.
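With coupled gates, the input gate is replaced by \(1 - f_t\), giving \(C_t = f_t * C_{t-1} + (1 - f_t) * \tilde{C}_t\). A minimal sketch with hand-picked (hypothetical) gate values:

```python
def coupled_update(f_t, C_prev, C_tilde):
    """C_t = f_t * C_{t-1} + (1 - f_t) * C~_t: forgetting and writing share one gate."""
    return [f * c + (1.0 - f) * ct for f, c, ct in zip(f_t, C_prev, C_tilde)]

print(coupled_update([1.0, 0.0], [0.3, -0.7], [0.9, 0.9]))  # [0.3, 0.9]
print(coupled_update([0.25], [4.0], [8.0]))                  # [7.0]
```

Each entry of the new state is a weighted average of the old value and the candidate, so a single gate decides both how much to forget and how much to write.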

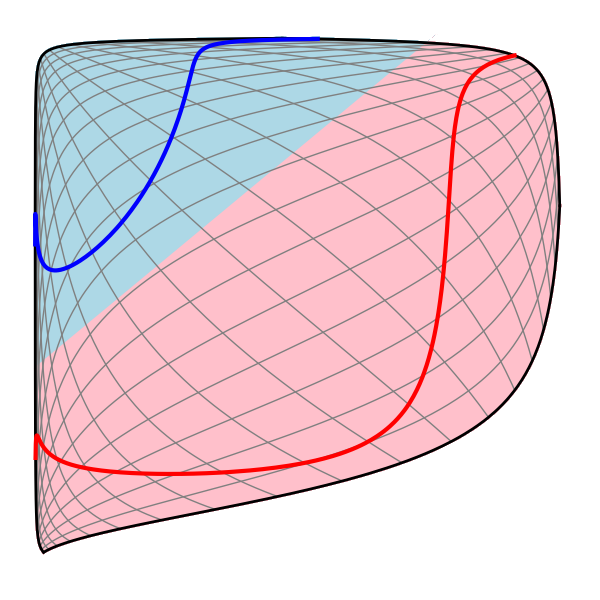

A slightly more dramatic variation on the LSTM is the Gated Recurrent Unit, or GRU, introduced by Cho, et al. (2014). It combines the forget and input gates into a single "update gate." It also merges the cell state and hidden state, and makes some other changes. The resulting model is simpler than standard LSTM models, and has been growing increasingly popular.
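A common way to write the GRU, with biases omitted for brevity, is \(z_t = \sigma(W_z \cdot [h_{t-1}, x_t])\), \(r_t = \sigma(W_r \cdot [h_{t-1}, x_t])\), \(\tilde{h}_t = \tanh(W \cdot [r_t * h_{t-1}, x_t])\) and \(h_t = (1 - z_t) * h_{t-1} + z_t * \tilde{h}_t\). Conventions differ on which of \(z_t\) and \(1 - z_t\) multiplies \(h_{t-1}\). The sketch below follows the form just given, with hypothetical weights; it shows that an update gate near 0 keeps the old state while one near 1 adopts the candidate.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer(W, v, act):
    """act(W . v), with biases omitted for brevity."""
    return [act(sum(w * x for w, x in zip(row, v))) for row in W]

def gru_step(W_z, W_r, W_h, h_prev, x_t):
    z_t = layer(W_z, h_prev + x_t, sigmoid)          # update gate
    r_t = layer(W_r, h_prev + x_t, sigmoid)          # reset gate
    reset = [r * h for r, h in zip(r_t, h_prev)]     # r_t * h_{t-1}
    h_tilde = layer(W_h, reset + x_t, math.tanh)     # candidate state
    # Single state vector: blend the old state and the candidate with z_t.
    return [(1.0 - z) * h + z * ht for z, h, ht in zip(z_t, h_prev, h_tilde)]

# Hypothetical 2-unit weights, rows over [h_{t-1}, x_t].
W_r = [[0.5, 0.0, 0.3], [0.0, 0.5, -0.3]]
W_h = [[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]]
h_prev, x_t = [0.2, -0.4], [1.0]
closed = gru_step([[0.0, 0.0, -20.0]] * 2, W_r, W_h, h_prev, x_t)  # z_t near 0
opened = gru_step([[0.0, 0.0, 20.0]] * 2, W_r, W_h, h_prev, x_t)   # z_t near 1
```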

These are only a few of the most notable LSTM variants. There are lots of others, like Depth Gated RNNs by Yao, et al. (2015). There are also some completely different approaches to tackling long-term dependencies, like Clockwork RNNs by Koutnik, et al. (2014).

Which of these variants is best? Do the differences matter? Greff, et al. (2015) do a nice comparison of popular variants, finding that they're all about the same. Jozefowicz, et al. (2015) tested more than ten thousand RNN architectures, finding some that worked better than LSTMs on certain tasks.

Conclusion

Earlier, I mentioned the remarkable results people are achieving with RNNs. Essentially all of these are achieved using LSTMs. They really work a lot better for most tasks!

Written down as a set of equations, LSTMs look pretty intimidating. Hopefully, walking through them step by step in this essay has made them a bit more approachable.

LSTMs were a big step in what we can accomplish with RNNs. It's natural to wonder: is there another big step? A common opinion among researchers is: "Yes! There is a next step and it's attention!" The idea is to let every step of an RNN pick information to look at from some larger collection of information. For example, if you are using an RNN to create a caption describing an image, it might pick a part of the image to look at for every word it outputs. In fact, Xu, et al. (2015) do exactly this; it might be a fun starting point if you want to explore attention! There have been a number of really exciting results using attention, and it seems like a lot more are around the corner…
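The "pick what to look at" idea can be sketched with one common form, dot-product soft attention: score each item in the collection against a query, turn the scores into weights with a softmax, and take the weighted average. Here the query stands in for the RNN's current state and the items for, say, features of image regions; all values are hypothetical, and this is a simplified stand-in rather than the exact mechanism of Xu, et al. (2015).

```python
import math

def soft_attention(query, items):
    """Return softmax weights over items and the weighted average of items."""
    scores = [sum(q * v for q, v in zip(query, item)) for item in items]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    context = [sum(w * item[d] for w, item in zip(weights, items))
               for d in range(len(items[0]))]
    return weights, context

# Hypothetical "regions" of an image, each described by a 2-number feature.
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = soft_attention([5.0, 0.0], regions)
```

The query matches the first and third regions equally well, so they share almost all of the weight while the second region is mostly ignored.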

Attention isn't the only exciting thread in RNN research. For example, Grid LSTMs by Kalchbrenner, et al. (2015) seem extremely promising. Work using RNNs in generative models, such as Gregor, et al. (2015), Chung, et al. (2015), or Bayer & Osendorfer (2015), also seems very interesting. The last few years have been an exciting time for recurrent neural networks, and the coming ones promise to only be more so!

Acknowledgments

I'm grateful to a number of people for helping me better understand LSTMs, commenting on the visualizations, and providing feedback on this post.

I'm very grateful to my colleagues at Google for their helpful feedback, especially Oriol Vinyals, Greg Corrado, Jon Shlens, Luke Vilnis, and Ilya Sutskever. I'm also thankful to many other friends and colleagues for taking the time to help me, including Dario Amodei and Jacob Steinhardt. I'm especially thankful to Kyunghyun Cho for extremely thoughtful correspondence about my diagrams.

Before this post, I practiced explaining LSTMs during two seminar series I taught on neural networks. Thanks to everyone who participated in those for their patience with me, and for their feedback.

-

[1] In addition to the original authors, a lot of people contributed to the modern LSTM. A non-comprehensive list is: Felix Gers, Fred Cummins, Santiago Fernandez, Justin Bayer, Daan Wierstra, Julian Togelius, Faustino Gomez, Matteo Gagliolo, and Alex Graves.


Source: https://colah.github.io/posts/2015-08-Understanding-LSTMs/

Posted by: hooperseliesser.blogspot.com
